Turbocharging Frigate NVR with Google Coral TPU: Performance Gains and Energy Savings

Upgrading my Frigate NVR with a Google Coral TPU delivered roughly 4× faster detection, 10× lower detector CPU usage, and a 50W drop in power draw. This essential homelab upgrade pays for itself in under 10 months while dramatically improving performance.

In the world of home surveillance and smart monitoring, Frigate NVR has emerged as a powerful open-source solution for homeowners and tech enthusiasts seeking advanced object detection capabilities without cloud dependencies. While Frigate works admirably on CPU, my recent upgrade to a Google Coral TPU accelerator delivered astonishing improvements in both performance and energy efficiency. This article details my journey, the technical improvements, and the compelling economic argument for making the switch.

The Setup: My Homelab Frigate Environment

My homelab runs on a Lenovo M920 Tiny with the following specifications:

- CPU: Intel Core i7-8700T @ 2.40GHz

- RAM: 32GB

- Storage: SSD for the OS, HDD for recordings

- Monitoring: 4 IP cameras at FullHD to 4MP resolution

- Software: Frigate NVR in Docker containers

- Object detection: Initially CPU-based, upgraded to Google Coral USB TPU

The Problem with CPU-Based Detection

Before implementing the TPU accelerator, my system relied entirely on CPU for object detection processing. This setup had several drawbacks:

- High resource utilization: The CPU detector process consistently used over 120% CPU, i.e. more than one full core, since it ran across multiple threads

- Slower inference speeds: Average detection took 60.64ms per frame

- Power-hungry operation: The system drew approximately 50W more power than it does with TPU-based detection

- Limited detection capacity: Some cameras experienced reduced detection frame rates

While functional, this configuration placed significant stress on my system, limiting my ability to run additional containers or expand camera coverage.

Preparing for the Coral TPU: A Detailed Setup Guide

When I received my Google Coral USB Accelerator, I was eager to plug it in and see the magic happen. However, I quickly discovered that proper implementation requires several critical setup steps. Here's my detailed guide to get everything working correctly:

1. Installing the Edge TPU Runtime

The first essential step was installing the Edge TPU runtime on my host system (not just in the container). This software is required for the host to communicate with the Coral device:

```bash
echo "deb https://packages.cloud.google.com/apt coral-edgetpu-stable main" | sudo tee /etc/apt/sources.list.d/coral-edgetpu.list
# Note: apt-key is deprecated on recent Debian/Ubuntu releases
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
sudo apt-get update
sudo apt-get install libedgetpu1-std
```

I chose the standard version (libedgetpu1-std) rather than the maximum-frequency version (libedgetpu1-max) to avoid potential thermal issues.
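Before moving on, it can help to confirm that the runtime is actually visible to the host's dynamic linker. The following is a minimal Python sketch, assuming the package registers `libedgetpu.so.*` in the ldconfig cache; the helper names are hypothetical, and a successful load only proves the library is present, not that the Coral itself works.

```python
import ctypes
import ctypes.util

# Sketch: assumes libedgetpu is registered with the dynamic linker (ldconfig cache).


def find_edgetpu_runtime():
    """Return the Edge TPU runtime's library name (e.g. 'libedgetpu.so.1') or None."""
    # On Linux, find_library() consults the ldconfig cache (falling back to gcc/ld),
    # so this only succeeds if libedgetpu is installed where the linker can see it.
    return ctypes.util.find_library("edgetpu")


def runtime_loads():
    """Return True if the runtime library can actually be loaded into this process."""
    name = find_edgetpu_runtime()
    if name is None:
        return False
    try:
        ctypes.CDLL(name)
    except OSError:
        return False
    return True


if __name__ == "__main__":
    print("libedgetpu:", find_edgetpu_runtime() or "not found")
    print("loadable:", runtime_loads())
```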

2. Creating Proper udev Rules

This step was crucial and is often overlooked in guides. The udev rule sets the group and permissions on the Coral TPU's USB device and creates a `/dev/apex_0` symlink to it:

```bash
sudo vim /etc/udev/rules.d/99-edgetpu-accelerator.rules
```

Then I added this content to the file:

```text
# Google Coral
SUBSYSTEM=="usb", ATTRS{idVendor}=="1a6e", ATTRS{idProduct}=="089a", GROUP="plugdev", MODE="0664", SYMLINK+="apex_0"
```

After saving, I reloaded the rules:

```bash
sudo udevadm control --reload-rules
sudo udevadm trigger
```

I had to unplug and reinsert my Coral USB device for the changes to take effect. A quick check with `ls -la /dev/apex_0` confirmed that the symlink was created properly.
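When checking the device, it helps to know that the Coral USB Accelerator appears under two USB IDs: `1a6e:089a` (Global Unichip) before its firmware is loaded, and `18d1:9302` (Google) once the Edge TPU runtime has initialized it. The rule above keys on the first one. Here is a small Python sketch that classifies `lsusb` output accordingly, assuming `lsusb` from usbutils is installed and prints its usual lines such as `Bus 002 Device 003: ID 18d1:9302 Google Inc.` (function and constant names are hypothetical):

```python
import re
import subprocess

# Sketch: assumes standard `lsusb` output. The Coral USB Accelerator enumerates as
# 1a6e:089a until the Edge TPU runtime loads its firmware, then as 18d1:9302.
CORAL_STATES = {
    ("1a6e", "089a"): "detected, firmware not loaded yet",
    ("18d1", "9302"): "detected, initialized by the Edge TPU runtime",
}

# Matches lines such as "Bus 002 Device 003: ID 18d1:9302 Google Inc."
LSUSB_RE = re.compile(r"Bus (\d+) Device (\d+): ID ([0-9a-f]{4}):([0-9a-f]{4})", re.IGNORECASE)


def find_coral(lsusb_output):
    """Return a list of (bus, device, state) tuples for every Coral in `lsusb` output."""
    found = []
    for line in lsusb_output.splitlines():
        match = LSUSB_RE.search(line)
        if match is None:
            continue
        bus, device, vendor, product = match.groups()
        state = CORAL_STATES.get((vendor.lower(), product.lower()))
        if state is not None:
            found.append((bus, device, state))
    return found


if __name__ == "__main__":
    try:
        output = subprocess.run(["lsusb"], capture_output=True, text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError) as exc:
        print(f"could not run lsusb: {exc}")
    else:
        corals = find_coral(output)
        if not corals:
            print("no Coral USB Accelerator found")
        for bus, device, state in corals:
            print(f"Bus {bus} Device {device}: {state}")
```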

3. Modifying Docker Compose

Next, I needed to update my Docker Compose configuration to ensure the container had proper access to both the USB device and the EdgeTPU library:

```yaml
services:
  frigate:
    # ... existing settings ...
    devices:
      - /dev/bus/usb:/dev/bus/usb
      - /dev/apex_0:/dev/apex_0 # This is crucial
      - /dev/dri/renderD128:/dev/dri/renderD128 # For hardware acceleration
    volumes:
      # ... existing volumes ...
      - /usr/lib/libedgetpu.so.1.0:/usr/lib/libedgetpu.so.1.0 # Required library
```

4. Downloading the Correct Edge TPU Model

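Finding the right model was a bit tricky. I needed an Edge TPU-compatible model that would work efficiently with my setup:

```bash
cd /path/to/frigate/config
wget https://github.com/google-coral/test_data/raw/master/ssdlite_mobiledet_coco_qat_postprocess_edgetpu.tflite
```

This downloads the SSDLite MobileDet model (320×320). The configuration in the next step uses SSD MobileNet V2 (300×300), which I eventually settled on, so whichever model you download, make sure the path, width and height in your Frigate config match it.

5. USB Port Selection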

After initially connecting to a front USB port, I considered moving to a back port for potentially better power delivery. USB 3.0 ports (typically blue) offer better performance for the Coral TPU due to higher data rates (5 Gbps vs 480 Mbps) and power delivery (900mA vs 500mA).
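Rather than guessing which port is better, you can check the link speed the Coral actually negotiated. On Linux, each USB device under `/sys/bus/usb/devices` exposes `idVendor`, `idProduct` and `speed` (in Mbit/s) files. The sketch below assumes that sysfs layout and the two Coral USB IDs (before and after firmware load); helper names are hypothetical:

```python
from pathlib import Path

# Sketch, assuming the Linux sysfs layout: every USB device directory under
# /sys/bus/usb/devices has idVendor, idProduct and speed (in Mbit/s) files.
CORAL_IDS = {("1a6e", "089a"), ("18d1", "9302")}  # Coral before / after firmware load


def read_attr(device_dir, name):
    """Read one sysfs attribute, or return None if the file does not exist."""
    path = device_dir / name
    return path.read_text().strip() if path.is_file() else None


def coral_link_speeds(sysfs_root="/sys/bus/usb/devices"):
    """Map each Coral's sysfs name (e.g. '2-1') to its negotiated link speed in Mbit/s."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return {}
    speeds = {}
    for device_dir in sorted(root.iterdir()):
        # Interface entries such as '2-1:1.0' have no idVendor file, so they never match.
        ids = (read_attr(device_dir, "idVendor"), read_attr(device_dir, "idProduct"))
        if ids in CORAL_IDS:
            speed = read_attr(device_dir, "speed")
            if speed is not None:
                speeds[device_dir.name] = float(speed)
    return speeds


def describe(mbps):
    """5000 Mbit/s or more is a USB 3.x link; 480 Mbit/s means USB 2.0 High Speed."""
    return "USB 3.x" if mbps >= 5000 else "USB 2.0 or slower"


if __name__ == "__main__":
    try:
        found = coral_link_speeds()
    except OSError as exc:
        found = {}
        print(f"could not read sysfs: {exc}")
    for name, mbps in found.items():
        print(f"{name}: {mbps:g} Mbit/s ({describe(mbps)})")
```

A reading of 5000 means the Coral is on a USB 3.x link; 480 means it is running at USB 2.0 speed regardless of which port it is plugged into.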

6. Configuring Frigate

The final step was updating my Frigate configuration to use the TPU and the correct model dimensions:

```yaml
model:
  path: /config/ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite
  width: 300 # Must match model requirements!
  height: 300 # Must match model requirements!

detectors:
  coral:
    type: edgetpu
    device: usb
    model_path: /config/ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite
  # Initially I had both detectors enabled, which was a mistake
  #cpu1:
  #  type: cpu
  #  num_threads: 4
```

Getting the model dimensions right was crucial; my initial attempt with mismatched dimensions caused errors like:

```text
ValueError: Cannot set tensor: Dimension mismatch. Got 320 but expected 300 for dimension 1 of input 7.
```

Google Coral TPU: The Hardware

The Google Coral USB Accelerator is a dedicated edge TPU (Tensor Processing Unit) designed specifically for machine learning inference. Priced at approximately 320 PLN (~$82 USD), it promised dramatic improvements in AI processing efficiency.

This small device packs a powerful ML accelerator designed to run TensorFlow Lite models that have been compiled for Edge TPU execution. What makes it special is its ability to execute neural network operations directly in hardware rather than in software, resulting in much faster inference times while consuming significantly less power than CPU-based alternatives.

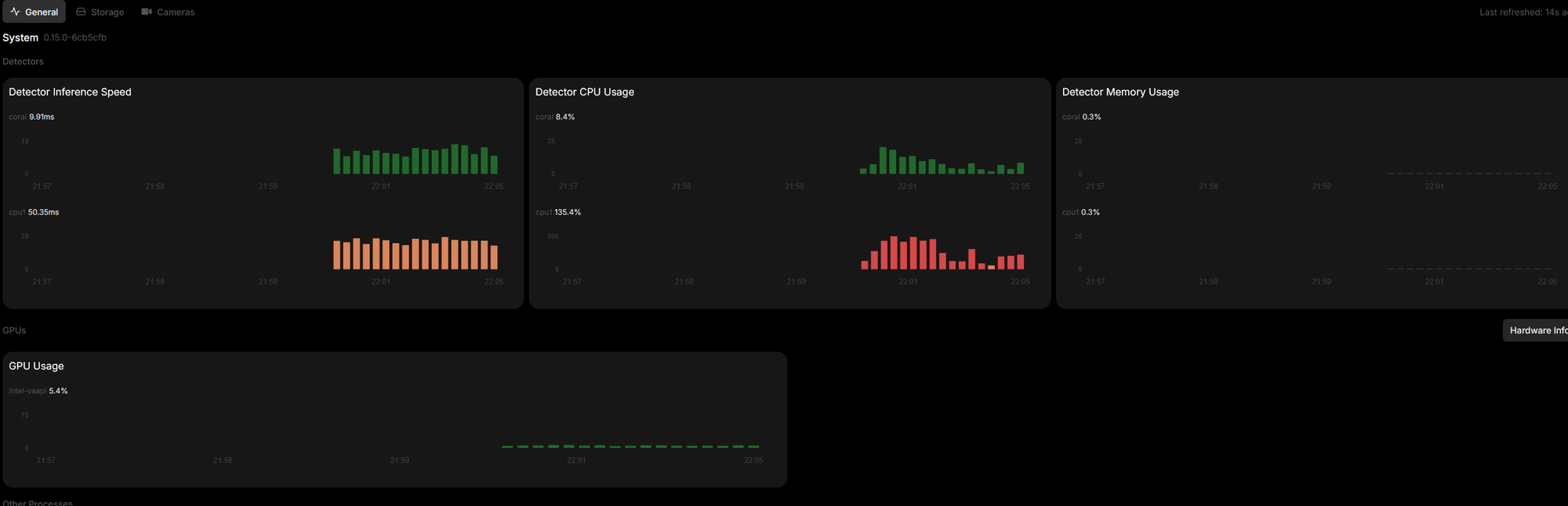

Immediate Performance Improvements

After restarting Frigate with the new configuration, the results were immediately noticeable across several key metrics:

| Metric | CPU Detection | TPU Detection | Improvement |

|---|---|---|---|

| Inference Speed | 60.64ms | 15.84ms | 4× faster |

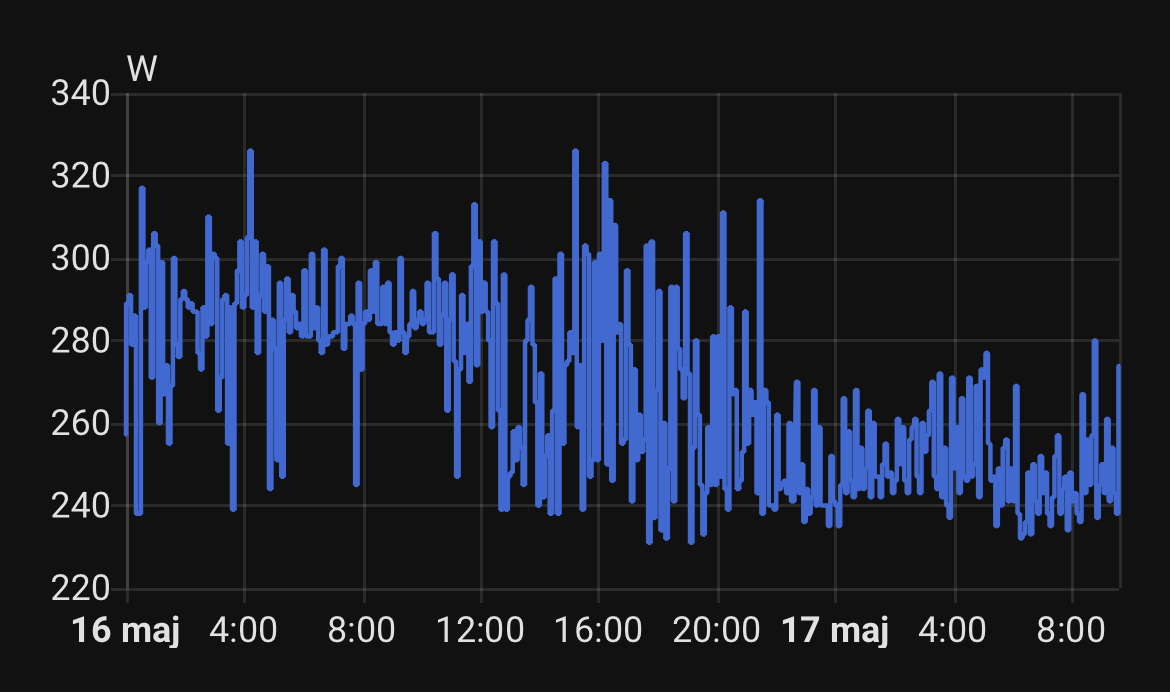

| Power Consumption | +50W | Baseline | 50W reduction |

| CPU Usage | 124.2% | 11.5% | 10× lower |

| Detection FPS (main camera) | 5.1 FPS | 9.6 FPS | 88% increase |

| System Stability | Occasional crashes | Rock solid | Significant |
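The Improvement column is plain ratio arithmetic. Spelled out in Python with the table's own numbers, the measured speedup is about 3.8× and the CPU reduction about 10.8×, which the table rounds to 4× and 10×:

```python
# Before/after figures copied from the table above (CPU detector vs Coral TPU).
cpu_detector = {"inference_ms": 60.64, "detector_cpu_pct": 124.2, "main_camera_fps": 5.1}
tpu_detector = {"inference_ms": 15.84, "detector_cpu_pct": 11.5, "main_camera_fps": 9.6}

# For "lower is better" metrics, the improvement is before / after.
speedup = cpu_detector["inference_ms"] / tpu_detector["inference_ms"]
cpu_drop = cpu_detector["detector_cpu_pct"] / tpu_detector["detector_cpu_pct"]
# For FPS (higher is better), express the gain as a percentage increase.
fps_gain_pct = (tpu_detector["main_camera_fps"] / cpu_detector["main_camera_fps"] - 1) * 100

print(f"Inference: {speedup:.2f}x faster")       # Inference: 3.83x faster
print(f"Detector CPU: {cpu_drop:.1f}x lower")     # Detector CPU: 10.8x lower
print(f"Main camera FPS: +{fps_gain_pct:.0f}%")   # Main camera FPS: +88%
```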

The most striking improvement was in power consumption. My system immediately drew approximately 50W less electricity after switching from CPU to TPU-based detection. I was amazed that such a small device could have such a dramatic impact on my overall system performance.

I verified these improvements through Frigate's API with commands like:

```bash
curl http://localhost:5000/api/stats | grep -i "coral\|tpu\|detect"
```

The stats clearly showed the coral detector running at 15.84ms inference speed with only 11.5% CPU usage, while the system's overall detection FPS nearly doubled to 12.4 FPS across all cameras.
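Because `/api/stats` returns a compact JSON document, grep tends to print all of it. A few lines of Python make the detector section easier to read. This is a sketch that relies only on the `detectors` shape shown later in this article (each detector name mapping to `inference_speed`, `detection_start` and `pid`) and assumes the API answers on port 5000 without authentication, as in the curl command; the function names are hypothetical. It also flags the dual-detector mistake described further down:

```python
import json
import urllib.request

# Sketch: assumes Frigate's /api/stats is reachable unauthenticated on port 5000
# and contains a "detectors" object as in the example JSON in this article.


def fetch_stats(base_url="http://localhost:5000"):
    """Download and decode Frigate's /api/stats JSON (same endpoint as the curl command)."""
    with urllib.request.urlopen(f"{base_url}/api/stats", timeout=5) as response:
        return json.load(response)


def detector_speeds(stats):
    """Return {detector_name: inference_speed_ms} from the stats' 'detectors' section."""
    return {name: info.get("inference_speed") for name, info in stats.get("detectors", {}).items()}


def report(stats):
    """Build human-readable lines, flagging the case where several detectors are configured."""
    speeds = detector_speeds(stats)
    lines = [f"{name}: {speed} ms" for name, speed in speeds.items()]
    if len(speeds) > 1:
        lines.append(f"warning: {len(speeds)} detectors configured ({', '.join(speeds)})")
    return lines


if __name__ == "__main__":
    try:
        lines = report(fetch_stats())
    except (OSError, ValueError) as exc:  # connection refused, timeout, invalid JSON
        lines = [f"could not read Frigate stats: {exc}"]
    print("\n".join(lines))
```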

Economic Analysis: The Compelling Case for TPU

The most pragmatic way to evaluate this upgrade is through a financial lens. Let's analyze the return on investment for the Coral TPU:

Initial Investment

- Google Coral USB Accelerator: 320 PLN (~$82 USD)

Annual Power Savings

- Power reduction: 50W = 0.05kW

- Annual operation: 24 hours × 365 days = 8,760 hours

- Energy saved annually: 0.05kW × 8,760 hours = 438 kWh

- Average electricity cost in Poland: 0.90 PLN/kWh ($0.23 USD/kWh)

- Annual cost savings: 438 kWh × 0.90 PLN = 394.2 PLN (~$101 USD)

Return on Investment

- Payback period: 320 PLN ÷ 394.2 PLN/year ≈ 0.81 years ≈ 9.7 months

- 5-year savings: (394.2 PLN × 5) - 320 PLN = 1,651 PLN (~$423 USD)
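The same arithmetic as a small Python function, so you can plug in your own measured wattage, tariff and price. The function and parameter names are illustrative; the values in the call are the figures above:

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 h of continuous operation


def coral_roi(price_pln, watts_saved, pln_per_kwh, years=5):
    """Reproduce the payback arithmetic: energy saved, yearly savings, payback, net savings."""
    kwh_per_year = watts_saved / 1000 * HOURS_PER_YEAR
    savings_per_year = kwh_per_year * pln_per_kwh
    return {
        "kwh_per_year": kwh_per_year,
        "savings_per_year_pln": savings_per_year,
        "payback_months": price_pln / savings_per_year * 12,
        "net_savings_pln": savings_per_year * years - price_pln,
    }


result = coral_roi(price_pln=320, watts_saved=50, pln_per_kwh=0.90)
for key, value in result.items():
    print(f"{key}: {value:.1f}")
# kwh_per_year: 438.0
# savings_per_year_pln: 394.2
# payback_months: 9.7
# net_savings_pln: 1651.0
```

The payback period is inversely proportional to the watts saved, so a setup that saves 25W instead of 50W would take twice as long to break even.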

This analysis demonstrates that the Coral TPU essentially pays for itself within 10 months through energy savings alone. After that, it continues to generate savings while delivering superior performance.

For my homelab setup, this was a no-brainer investment. Not only did I get dramatically improved performance, but I'll actually save money in the long run.

Technical Deep Dive: Detection Models Comparison

During my implementation, I experimented with two different models optimized for the Coral TPU:

SSD MobileNet V2 (300×300)

```yaml
model:
  path: /config/ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite
  width: 300
  height: 300
```

SSDLite MobileDet (320×320)

```yaml
model:
  path: /config/ssdlite_mobiledet_coco_qat_postprocess_edgetpu.tflite
  width: 320
  height: 320
```

Both models performed well, but with some notable differences:

| Aspect | SSD MobileNet V2 | SSDLite MobileDet |
|---|---|---|
| Input Size | 300×300 pixels | 320×320 pixels |
| Architecture | MobileNet V2 with SSD | MobileDet with SSDLite |
| Small Object Detection | Good | Excellent |
| Inference Speed | Very Fast | Fast |
| Age | Older | Newer |

I settled on the SSD MobileNet V2 model primarily due to its excellent balance of speed and accuracy for my needs, though both models performed admirably on the Coral TPU.
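The dimension mismatch is easy to catch before restarting Frigate. A minimal Python sketch: a lookup table of the two models compared above (sizes taken from the table, not read from the model files themselves) and a check against the width and height in the config. The helper names are hypothetical, and any other model would need its input size added by hand.

```python
from pathlib import PurePosixPath

# Sketch: input sizes of the two Edge TPU models compared above, copied from the
# comparison table rather than read from the .tflite files.
MODEL_INPUT_SIZES = {
    "ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite": (300, 300),
    "ssdlite_mobiledet_coco_qat_postprocess_edgetpu.tflite": (320, 320),
}


def check_model_dims(model_path, width, height):
    """Return None when width/height match the model, otherwise a description of the problem."""
    name = PurePosixPath(model_path).name
    expected = MODEL_INPUT_SIZES.get(name)
    if expected is None:
        return f"unknown model {name}: look up its input size manually"
    if (width, height) != expected:
        return f"{name} expects {expected[0]}x{expected[1]}, config has {width}x{height}"
    return None


# Reproduces the mismatch that triggered the "Got 320 but expected 300" error:
print(check_model_dims("/config/ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite", 320, 320))
# ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite expects 300x300, config has 320x320
```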

Beyond Energy Savings: Additional Benefits

The benefits of my TPU upgrade extended far beyond power savings:

- Freed CPU Resources: With the detector's CPU usage dropping from 124.2% to just 11.5%, I gained substantial headroom for running additional containers and services on my Lenovo M920.

- Improved Detection Quality: The higher detection frame rates (nearly double on my main "taras" camera) led to fewer missed events and more reliable tracking of people, vehicles, and animals.

- Reduced Heat Generation: Lower power consumption meant less heat generated by the system. My Lenovo M920 Tiny now runs much cooler, potentially extending the lifespan of the hardware.

- Scalability: My system now has the capacity to handle additional cameras or higher resolutions if I decide to expand my surveillance setup.

- Enhanced Stability: I noticed fewer system crashes and more consistent performance overall. Before adding the TPU, detection would occasionally stop working, requiring a restart of Frigate.

The most pleasant surprise was how this upgrade impacted my other containers. With the substantial CPU savings, I now have plenty of resources available for running additional services alongside Frigate.

Implementation Challenges and Lessons Learned

My journey wasn't without obstacles. Here are some challenges I faced and the lessons I learned along the way:

- Model Dimension Mismatch: My first attempt failed spectacularly because I didn't pay attention to the input dimensions required by the model. My config specified 320×320 while the model expected 300×300:

  ```text
  ValueError: Cannot set tensor: Dimension mismatch. Got 320 but expected 300 for dimension 1 of input 7.
  ```

  The fix was straightforward once I understood the issue, but it cost me an hour of troubleshooting.

- Dual Detection Issue: My biggest mistake was leaving both the CPU and TPU detectors enabled simultaneously. This caused Frigate to run both detection processes in parallel, negating much of the CPU and power savings. The stats output showed both detectors running:

  ```json
  {"detectors":{"coral":{"inference_speed":14.55,"detection_start":0.0,"pid":513},
  "cpu1":{"inference_speed":60.64,"detection_start":1747425889.866531,"pid":516}}}
  ```

  Commenting out the CPU detector in my config file immediately cut CPU usage by over 100 percentage points.

- Library Access Issues: Initially, I forgot to map the TPU library into the container. Adding this line to my Docker Compose file solved the problem:

  ```yaml
  volumes:
    - /usr/lib/libedgetpu.so.1.0:/usr/lib/libedgetpu.so.1.0
  ```

- USB Port Considerations: While my front USB port worked well (15.84ms inference speed), I learned that rear ports often provide more stable power delivery. After checking my inference speed with `curl http://localhost:5000/api/stats | grep inference_speed`, I decided the front port's performance was good enough to leave it as is.

- Downloaded Model Issues: My first download attempt produced an HTML error page instead of the actual model file. Double-checking with `file /config/ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite` revealed the problem, and I had to restart the download with proper redirect handling.
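The `file` check from the last point can also be scripted. TensorFlow Lite models are FlatBuffers whose file identifier `TFL3` sits at bytes 4 to 8, so a downloaded model can be sanity-checked without loading it. A minimal Python sketch under that assumption (the HTML test is only a heuristic, and the function names are hypothetical):

```python
from pathlib import Path

# Sketch: relies on the TFLite FlatBuffer file identifier "TFL3" at byte offset 4.


def looks_like_tflite(path):
    """True if the file carries the TFLite identifier 'TFL3' at bytes 4-8."""
    with open(path, "rb") as f:
        header = f.read(8)
    return len(header) == 8 and header[4:8] == b"TFL3"


def looks_like_html(path):
    """Heuristic for the failure mode above: an HTML error page saved under a .tflite name."""
    with open(path, "rb") as f:
        start = f.read(512).lstrip().lower()
    return start.startswith((b"<!doctype html", b"<html"))


if __name__ == "__main__":
    model = Path("/config/ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite")
    if not model.is_file():
        print(f"{model} not found")
    elif looks_like_html(model):
        print(f"{model} is an HTML page; download it again")
    elif not looks_like_tflite(model):
        print(f"{model} does not look like a TFLite model")
    else:
        print(f"{model} has a valid TFLite header")
```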

The most important lesson: when integrating specialized hardware like the Coral TPU, each step of the setup process matters. Skipping or misconfiguring any single part can result in suboptimal performance or complete failure.

My Final Configuration for Optimal TPU Performance

After working through all the issues, here's my final configuration that delivers optimal performance:

Frigate Config (config.yml):

```yaml
# Global Frigate Configuration
mqtt:
  host: 10.1.78.93
  user: xx
  password: xx

model:
  path: /config/ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite
  width: 300 # Must match the model's input size
  height: 300

detectors:
  coral:
    type: edgetpu
    device: usb
    model_path: /config/ssd_mobilenet_v2_coco_quant_postprocess_edgetpu.tflite
  # CPU detector commented out to avoid parallel processing
  #cpu1:
  #  type: cpu
  #  num_threads: 4
```

Docker Compose (docker-compose.yml):

```yaml
services:
  frigate:
    container_name: frigate
    privileged: true
    restart: unless-stopped
    image: ghcr.io/blakeblackshear/frigate:stable
    shm_size: "1024mb"
    devices:
      - /dev/bus/usb:/dev/bus/usb
      - /dev/apex_0:/dev/apex_0
      - /dev/dri/renderD128:/dev/dri/renderD128
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - ./config:/config
      - /media/frigate:/media/frigate
      - /usr/lib/libedgetpu.so.1.0:/usr/lib/libedgetpu.so.1.0
      - type: tmpfs
        target: /tmp/cache
        tmpfs:
          size: 4000000000
```

This setup ensures that:

- Only the Coral TPU detector is used (no wasted CPU on parallel detection)

- The model dimensions match exactly what the model expects

- The container has access to both the TPU device and required libraries

- Hardware acceleration (via Intel's GPU for my setup) is still available for video decoding
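A missing host path is one of the easier ways to break this setup: Docker refuses to start a container whose `devices:` entry does not exist, and with the short volume syntax a missing bind-mount source is created as an empty directory. Here is a hypothetical Python preflight that checks the host paths from the compose file above before running `docker compose up`:

```python
import os

# Sketch: host paths that the docker-compose.yml above passes into the container.
HOST_PATHS = (
    "/dev/bus/usb",                # USB bus for the Coral
    "/dev/apex_0",                 # symlink created by the udev rule
    "/dev/dri/renderD128",         # Intel GPU for video decoding
    "/usr/lib/libedgetpu.so.1.0",  # Edge TPU runtime library bind mount
)


def preflight(paths=HOST_PATHS):
    """Return {path: exists}; os.path.exists() is also False for a dangling symlink."""
    return {path: os.path.exists(path) for path in paths}


if __name__ == "__main__":
    for path, ok in preflight().items():
        print(f"{'ok     ' if ok else 'MISSING'} {path}")
```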

The results have been fantastic - my four cameras now detect objects reliably with dramatically lower resource usage and power consumption.

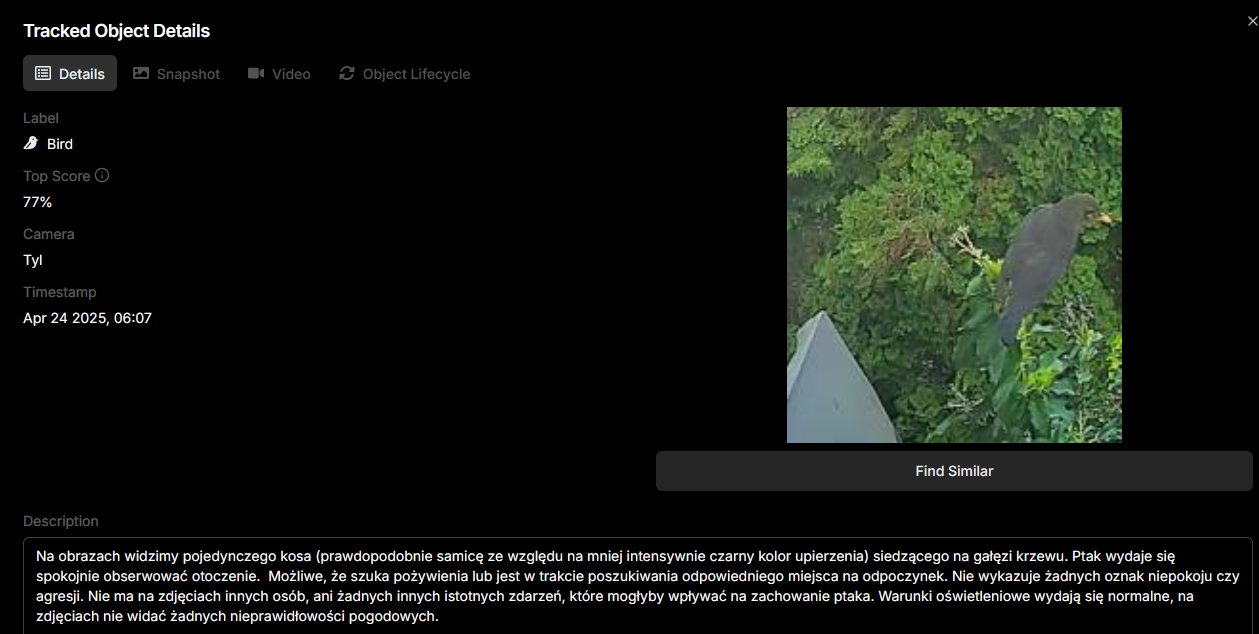

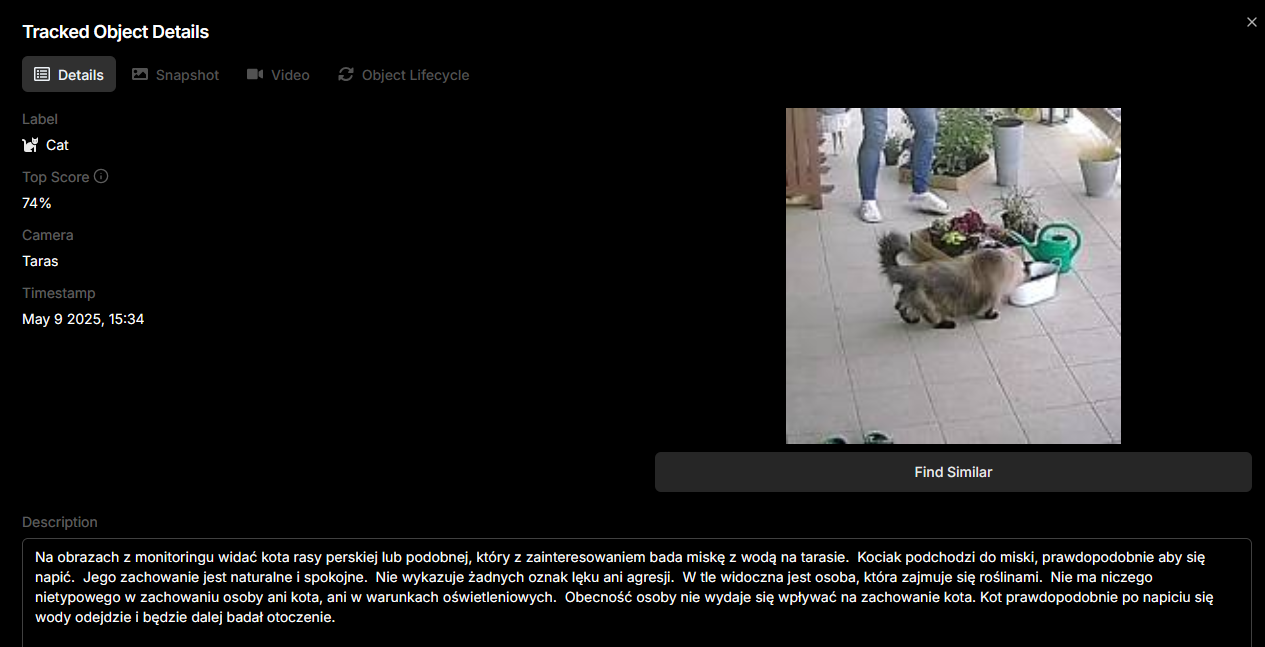

Enhanced Detection Analysis with Gemini

Now that I had fast, reliable detection with the Coral TPU, I wanted to take my Frigate setup to the next level. With all the freed-up CPU resources, I decided to add Google's Gemini AI to analyze detected objects and describe them in detail. This additional layer of intelligence turns simple object detection into a rich, contextual understanding of events.

I configured Gemini to analyze detection events with the following prompt:

```yaml
# Global default prompt
prompt: |
  Jesteś asystentem analizującym sekwencję obrazów z kamery monitoringu {camera}.
  Opisz zwięźle po polsku, co dzieje się na obrazach. Skup się na ruchomym obiekcie {label} i jego interakcjach.
  Analizuj prawdopodobne intencje lub zachowanie {label} na podstawie jego działań i ruchu, a nie tylko opisuj wygląd czy otoczenie. Zastanów się, co {label} robi, dlaczego i co może zrobić dalej.
  Dodatkowo zwróć uwagę (jeśli coś zauważysz):
  - Czy na obrazach jest więcej niż jedna osoba? Czy pojawiły się lub zniknęły nagle jako grupa?
  - Warunki oświetleniowe i pogodowe (np. ciemno, deszcz). Czy czyjaś obecność/zachowanie wydaje się nietypowe w tych warunkach?
  - Nietypowe zachowania: długotrwałe stanie w miejscu, nerwowe rozglądanie się, próby ukrycia twarzy (kaptur, maska), skrępowane lub podejrzane ruchy.
  Wspomnij o tych dodatkowych punktach tylko, jeśli są istotne i widoczne.
```

For my English-speaking readers, this translates to:

```yaml
# Global default prompt
prompt: |
  You are an assistant analyzing a sequence of images from the {camera} surveillance camera.
  Briefly describe in Polish what is happening in the images. Focus on the moving object {label} and its interactions.
  Analyze the probable intentions or behavior of {label} based on its actions and movement, rather than just describing appearance or surroundings. Consider what {label} is doing, why, and what it might do next.
  Additionally, pay attention to the following (if you notice anything):
  - Is there more than one person in the images? Did they appear or disappear suddenly as a group?
  - Lighting and weather conditions (e.g., darkness, rain). Does someone's presence or behavior seem unusual in these conditions?
  - Unusual behaviors: standing in one place for a long time, looking around nervously, attempts to hide the face (hood, mask), awkward or suspicious movements.
  Mention these additional points only if they are relevant and visible.
```

The results were impressive. Instead of simply knowing that a "person" or "car" was detected, I now receive detailed descriptions that provide context and meaning. For example, when a bird was detected, Gemini not only identified it but also described its behavior: "A single blackbird (likely a male given its intense black coloration) perched on a bush branch, calmly observing its surroundings, possibly looking for food."

An unexpected benefit of this detailed detection analysis was Gemini's remarkable ability to identify specific species visible in the recordings. The system correctly identified dog breeds, cat types, and even bird species with surprising accuracy. This has actually sparked a new interest for me in learning about the local wildlife that visits my property!
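Frigate fills in `{camera}` and `{label}` for each event before the prompt is sent to Gemini. To preview roughly what the model receives, here is a Python sketch that assumes simple `str.format`-style substitution; that is an assumption made for illustration, not Frigate's own implementation, and the template is a shortened copy of the English prompt above.

```python
# Sketch: shortened copy of the English prompt; {camera} and {label} are its placeholders.
# Assumes str.format-style substitution (under that assumption, literal braces would
# need doubling); this is not Frigate's actual code.
PROMPT_TEMPLATE = (
    "You are an assistant analyzing a sequence of images from the {camera} surveillance camera.\n"
    "Briefly describe in Polish what is happening in the images. "
    "Focus on the moving object {label} and its interactions."
)


def render_prompt(template, camera, label):
    """Fill in the camera name and object label for one detection event."""
    return template.format(camera=camera, label=label)


print(render_prompt(PROMPT_TEMPLATE, camera="taras", label="bird"))
```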

Conclusion: Is the Coral TPU Worth It?

Based on my experience, the Google Coral TPU represents one of the best upgrades possible for a Frigate NVR system. The combination of drastically improved performance, significantly reduced power consumption, and reasonable price make it a compelling investment for any homelab enthusiast.

With a payback period of less than a year through energy savings alone, plus the substantial performance benefits, I can confidently recommend the Coral TPU as an essential addition to any Frigate installation.

Here's a recap of the most impressive improvements:

- 4× faster inference speeds (60.64ms → 15.84ms)

- 10× lower CPU usage (124.2% → 11.5%)

- Nearly doubled detection rate on cameras

- 50W power reduction (438 kWh annually)

- ROI in under 10 months through energy savings alone

For home automation enthusiasts seeking to optimize their surveillance systems, the Google Coral TPU provides a rare win-win scenario: better performance with lower operating costs. In today's energy-conscious world, that's an upgrade worth making.

Even though the setup process had its challenges, the effort was well worth it. My Frigate system is now faster, more reliable, and more energy-efficient than I ever thought possible with my existing hardware.

Have you implemented a Coral TPU with your Frigate installation? Share your experiences and performance metrics in the comments below!
